Regression working example

Nick Fountain-Jones, Gustavo Machado

2022-01-12

Source:vignettes/Vignette_regression.Rmd

Vignette_regression.RmdGeneral introduction

MrIML is a R package allows users to generate and interpret multi-response models (i.e., joint species distribution models) leveraging advances in data science and machine learning. MrIML couples the tidymodel infrastructure developed by Max Kuhn and colleagues with model agnostic interpretable machine learning tools to gain insights into multiple response data such as. As such mrIML is flexible and easily extendable allowing users to construct everything from simple linear models to tree-based methods for each response using the same syntax and same way to compare predictive performance. In this vignette we will guide you through how to apply this package to ecological genomics problems using the regression functionality of the package. This data set comes from Fitzpatrick et al 2014 who were examining adaptive genetic variation in relation to geography and climate adaptation (current and future) in balsam poplar(Populus balsamifera). See Ecology Letters, (2014) doi: 10.1111/ele.12376. In this paper they used the similar gradient forests routine (see Ellis et al 2012 Ecology), and we show that MrIML can not only provide more flexible model choice and interpretive capabilities, but can derive new insights into the relationship between climate and genetic variation. Further, we show that linear models of each loci have slightly greater predictive performance.

We focus on the adaptive SNP loci from GIGANTEA-5 (GI5) gene that has known links to stem development, plant circadian clock and light perception pathway. The data is the proportion of individuals in that population with that SNP loci.

Lets load that data

###Parallel processing

MrIML provides uses the flexible future apply functionality to set up multi-core processing. In the example below, we set up a cluster using 4 cores. If you don’t set up a cluster, the default settings will be used and the analysis will run sequentially.

# detectCores() #check how many cores you have available. We suggest keeping one core free for internet browsing etc.

cl <- parallel::makeCluster(4)

future::plan(cluster, workers=cl)Running the analyis

Performing the analysis is very similar to our classification example. Lets start with a constructing a linear model for this data set. We set Model 1 to a linear regression. See https://www.tidymodels.org/find/ for other regression model options Note that ‘mode’ must be regression and in MrIMLpredicts, model has to be set to ‘regression’.

model_lm <- #model used to generate yhat

# specify that the model is a random forest

linear_reg() %>%

# select the engine/package that underlies the model

set_engine("lm") %>%

# choose either the continuous regression or binary classification mode

set_mode("regression")

yhats_lm <- mrIMLpredicts(X=X,Y=Y, Model=model_lm, balance_data='no', mode='regression', seed = sample.int(1e8, 1)) ## Balanced data= up updamples and down downsampled to create a balanced set. For regression 'no' has to be selected.

#save(yhats, file='Regression_lm') #always a good ideaModel performance can be examined the same way as in the classification example, however the metrics are different. We provide root mean square error (rmse) and R2. You can see that the overall R2 is 0.13 but there is substantial variation across loci in predictive performance.

ModelPerf <- mrIMLperformance(yhats_lm, Model=model_lm, Y=Y, mode='regression')

ModelPerf[[1]] #predictive performance for individual responses.

#> response model_name rmse rsquared

#> 1 CANDIDATE_GI5_108 linear_reg 0.05889193 0.035336124

#> 2 CANDIDATE_GI5_198 linear_reg 0.14942738 0.876430361

#> 3 CANDIDATE_GI5_268 linear_reg 0.10342546 0.760268726

#> 4 CANDIDATE_GI5_92 linear_reg 0.09045388 0.102442696

#> 5 CANDIDATE_GI5_1950 linear_reg 0.12408924 0.928373620

#> 6 CANDIDATE_GI5_2382 linear_reg 0.17132981 0.008165084

#> 7 CANDIDATE_GI5_2405 linear_reg 0.11245399 0.889209981

#> 8 CANDIDATE_GI5_2612 linear_reg 0.15102748 0.639031340

#> 9 CANDIDATE_GI5_2641 linear_reg 0.10743726 0.206594480

#> 10 CANDIDATE_GI5_33 linear_reg 0.18820927 0.452216116

#> 11 CANDIDATE_GI5_3966 linear_reg 0.23113000 0.049929111

#> 12 CANDIDATE_GI5_5033 linear_reg 0.09421835 NA

#> 13 CANDIDATE_GI5_5090 linear_reg 0.23540570 0.027992037

#> 14 CANDIDATE_GI5_5119 linear_reg 0.12486783 0.088733234

#> 15 CANDIDATE_GI5_8997 linear_reg 0.15711904 0.558054440

#> 16 CANDIDATE_GI5_9287 linear_reg 0.09695477 0.062483307

#> 17 CANDIDATE_GI5_9447 linear_reg 0.17059641 0.034493053

#> 18 CANDIDATE_GI5_9551 linear_reg 0.11730342 0.874519512

#> 19 CANDIDATE_GI5_9585 linear_reg 0.13759233 0.592627564

#> 20 CANDIDATE_GI5_9659 linear_reg 0.14800543 0.594642844

ModelPerf[[2]]#overall average r2

#> [1] 0.138497

p1 <- as.data.frame(ModelPerf[[1]])

#save as a dataframe to compare to other models.Lets compare the performance of linear models to that of random forests. Random forests is the computational engine in gradient forests. Notice for random forests we have two hyperparameters to tune; mtry (number of features to randomly include at each split) and min_n (the minimum number of data points in a node that are required for the node to be split further). The syntax ‘tune()’ acts a placeholder to tell MrIML to tune those hyperparamters across a grid of values (defined in MRIML predicts ‘tune_grid_size’ argument). Different algorithms will have different hyperparameters.See https://www.tidymodels.org/find/parsnip/ for parameter details. Note that large grid sizes (>10) for algorithms with lots of hyperparameters (such as extreme gradient boosting) will be computationally demanding. In this case we choose a grid size of 5.

model_rf <-

rand_forest(trees = 100, mode = "regression", mtry = tune(), min_n = tune()) %>% #100 trees are set for brevity. Aim to start with 1000

set_engine("randomForest")

yhats_rf <- mrIMLpredicts(X=X,Y=Y, Model=model_rf, balance_data='no', mode='regression', tune_grid_size=5, seed = sample.int(1e8, 1) )

#save(yhats, file='Regression_rf')

ModelPerf <- mrIMLperformance(yhats_rf, Model=model_rf, Y, mode='regression')

ModelPerf[[1]] #predictive performance for individual responses.

#> response model_name rmse rsquared

#> 1 CANDIDATE_GI5_108 rand_forest 0.02750271 0.04653376

#> 2 CANDIDATE_GI5_198 rand_forest 0.13776839 0.49146611

#> 3 CANDIDATE_GI5_268 rand_forest 0.04996621 0.68426963

#> 4 CANDIDATE_GI5_92 rand_forest 0.10788214 0.46016758

#> 5 CANDIDATE_GI5_1950 rand_forest 0.12804227 0.26383884

#> 6 CANDIDATE_GI5_2382 rand_forest 0.04503178 0.16194317

#> 7 CANDIDATE_GI5_2405 rand_forest 0.13677047 0.18307170

#> 8 CANDIDATE_GI5_2612 rand_forest 0.14764140 0.18637982

#> 9 CANDIDATE_GI5_2641 rand_forest 0.06876568 0.06608491

#> 10 CANDIDATE_GI5_33 rand_forest 0.14164495 0.12928938

#> 11 CANDIDATE_GI5_3966 rand_forest 0.13678949 0.20509703

#> 12 CANDIDATE_GI5_5033 rand_forest 0.05199386 0.09948352

#> 13 CANDIDATE_GI5_5090 rand_forest 0.10131552 0.86651928

#> 14 CANDIDATE_GI5_5119 rand_forest 0.04915427 0.10507785

#> 15 CANDIDATE_GI5_8997 rand_forest 0.13778847 0.24577111

#> 16 CANDIDATE_GI5_9287 rand_forest 0.04138992 0.08990481

#> 17 CANDIDATE_GI5_9447 rand_forest 0.04801897 0.05686854

#> 18 CANDIDATE_GI5_9551 rand_forest 0.13273004 0.46296239

#> 19 CANDIDATE_GI5_9585 rand_forest 0.13813631 0.29612228

#> 20 CANDIDATE_GI5_9659 rand_forest 0.12744068 0.22494079

ModelPerf[[2]]#overall average r2

#> [1] 0.09778868

p2 <- as.data.frame(ModelPerf[[1]])Plotting

You can see that predictive performance is actually slightly less using random forests (overall R2 = 0.12) but for some loci random forests does better than our linear models and sometimes worse. Which to choose? Generally simpler models are preferred (the linear model in this case) but it depends on how important to think non-linear response are. In future versions of MrIML we will implement ensemble models that will overcome this issue. For the time-being we will have a look at variable importance for the random forest based model.

VI <- mrVip(yhats=yhats_rf, X=X)

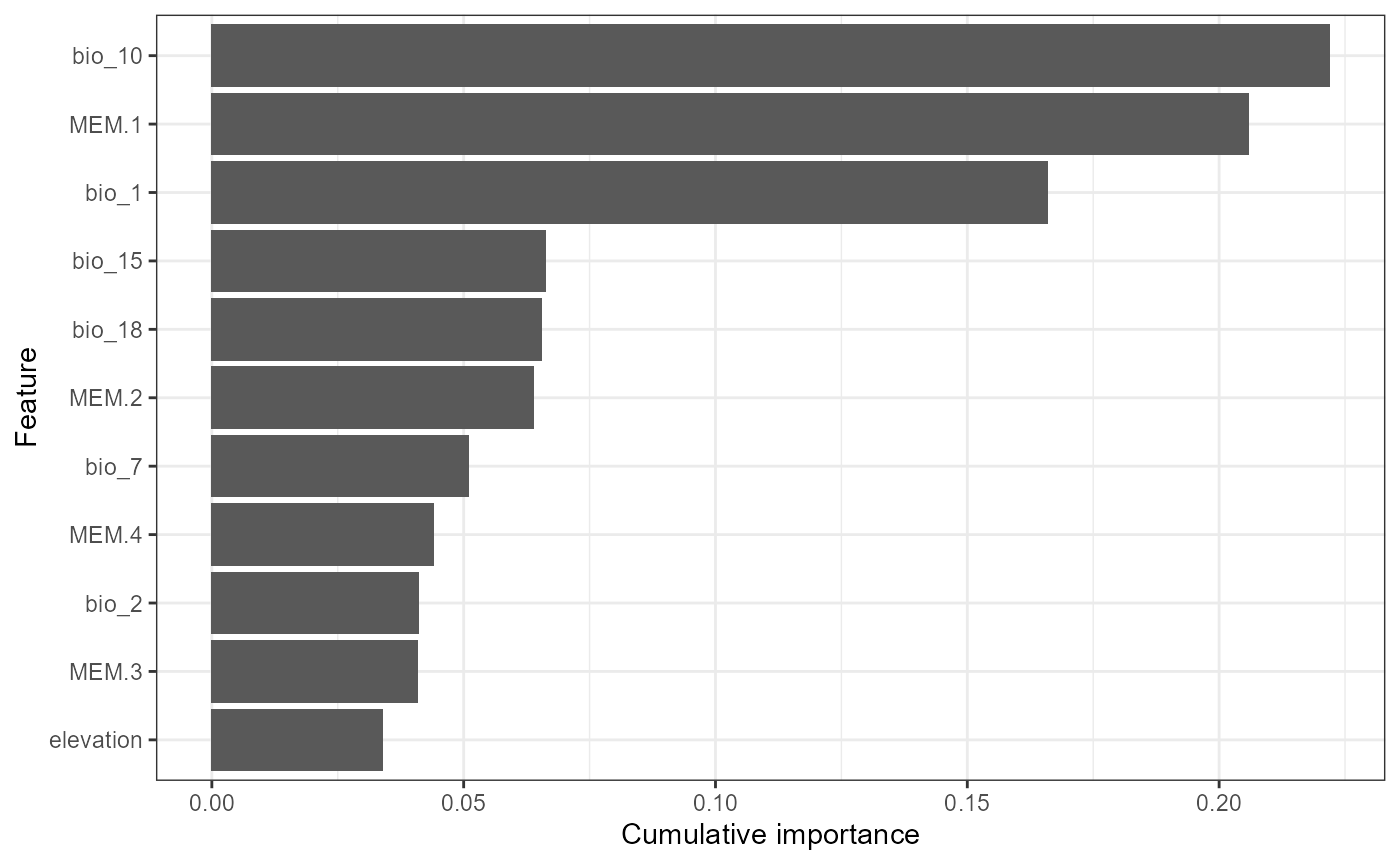

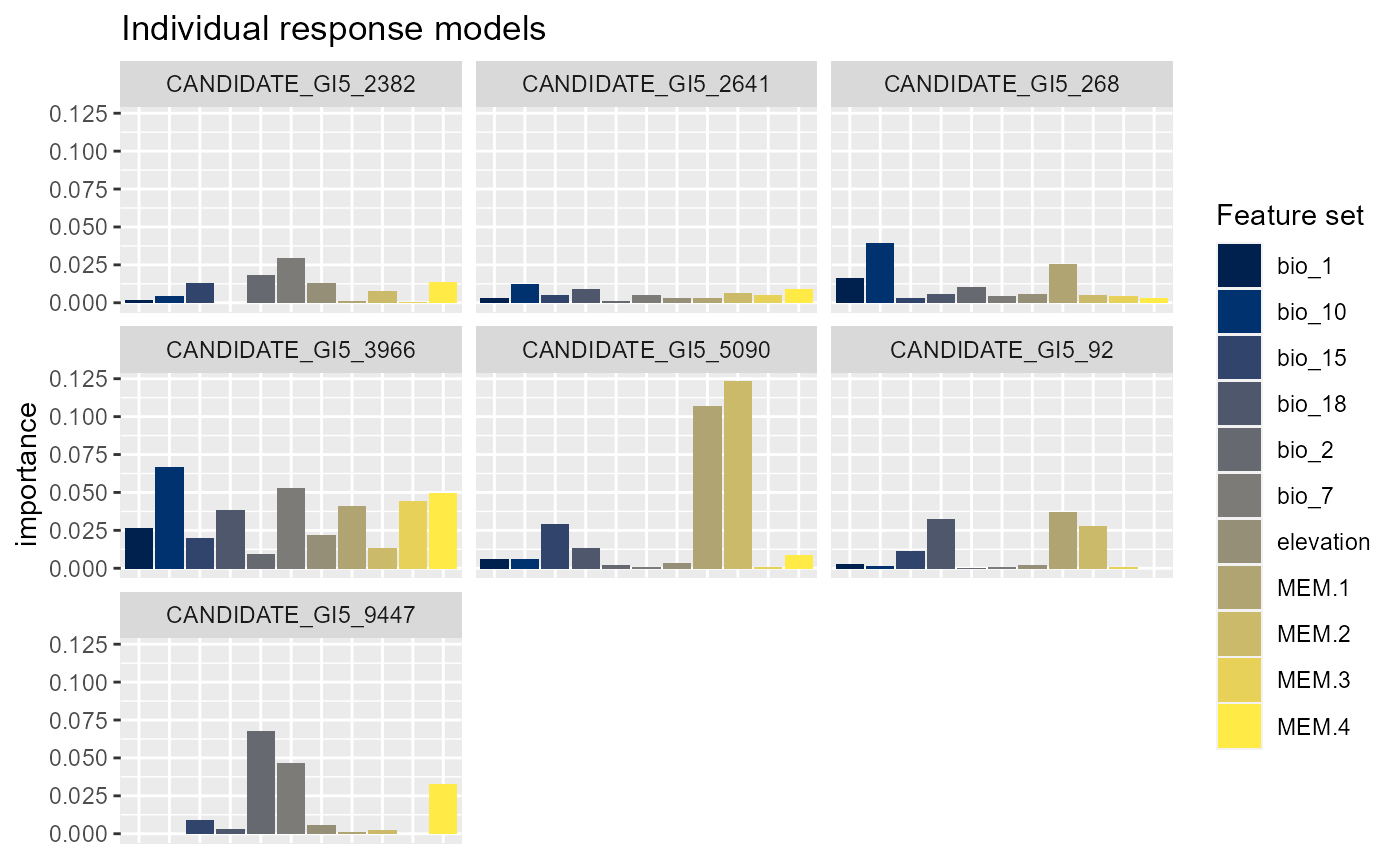

plot_vi(VI=VI, X=X,Y=Y, modelPerf=ModelPerf, cutoff= 0.1, mode='regression')

#> Press [enter] to plot individual variable importance summaries

Cutoff reduces the number of individual SNP plots presented in the second plot and ‘plot.pca=’yes’’ enables the variable importance scores to be analysed using principal component analysis (PCA) where SNPs closer in PCA space are shaped by similar combinations of features. You can see that bio_18 (summer precipitation), bio_1 (mean annual temperature) and bio_10 (mean summer temperature) are the most important features overall. Summer precipitation was not as important in Fitzpatrick et al but otherwise these results are similar. The second plot shows the individual models (with an r2 > 0.1, for your data you will need to play around with this threshold) and you can see for some SNPs bio_1 is more important whereas for another MEM.1 is more prominent.The PCA shows that candidate 5119, 9287, 5033 and 108 are shaped similarly by the features we included and may, for example, be product of linked selection.

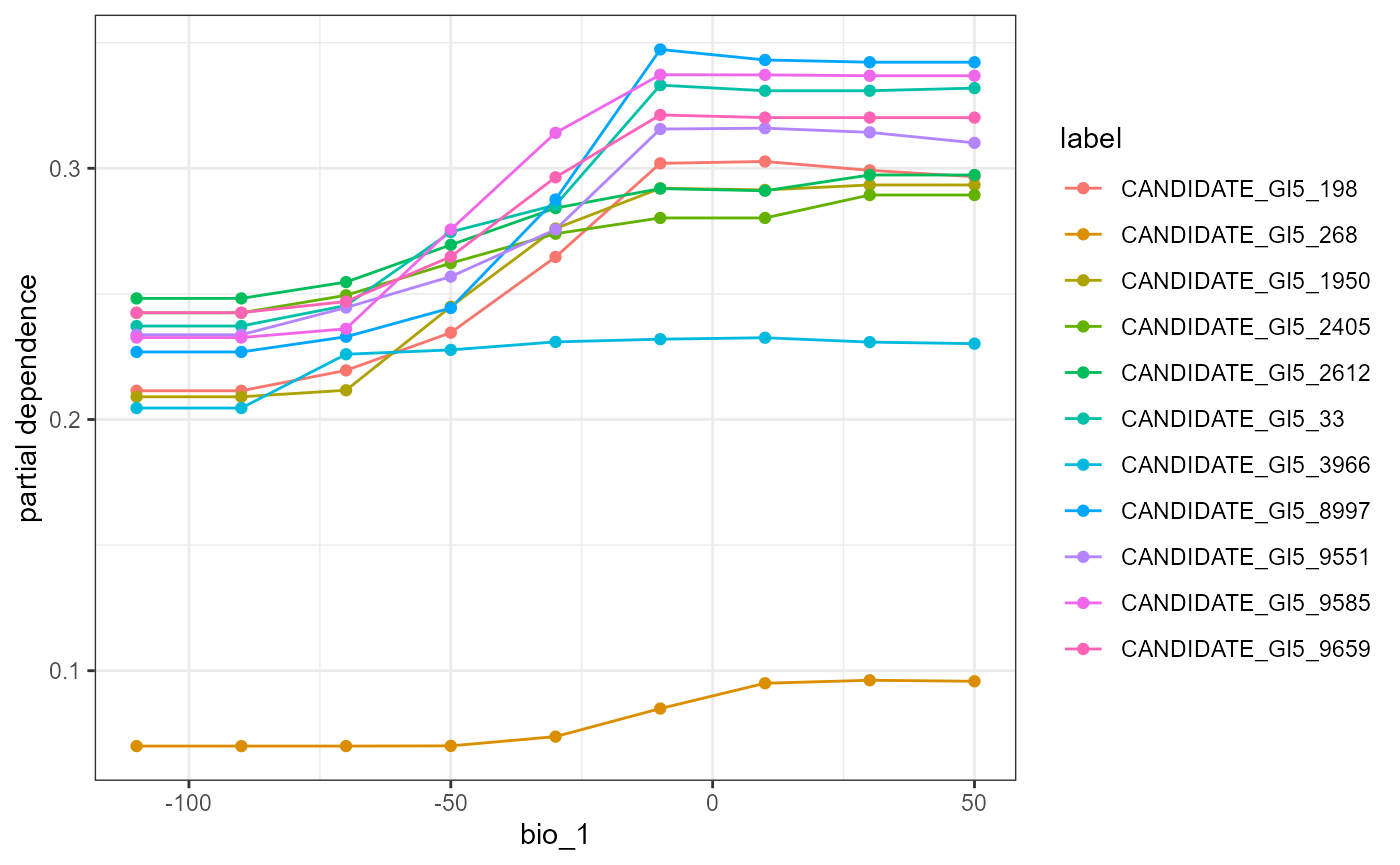

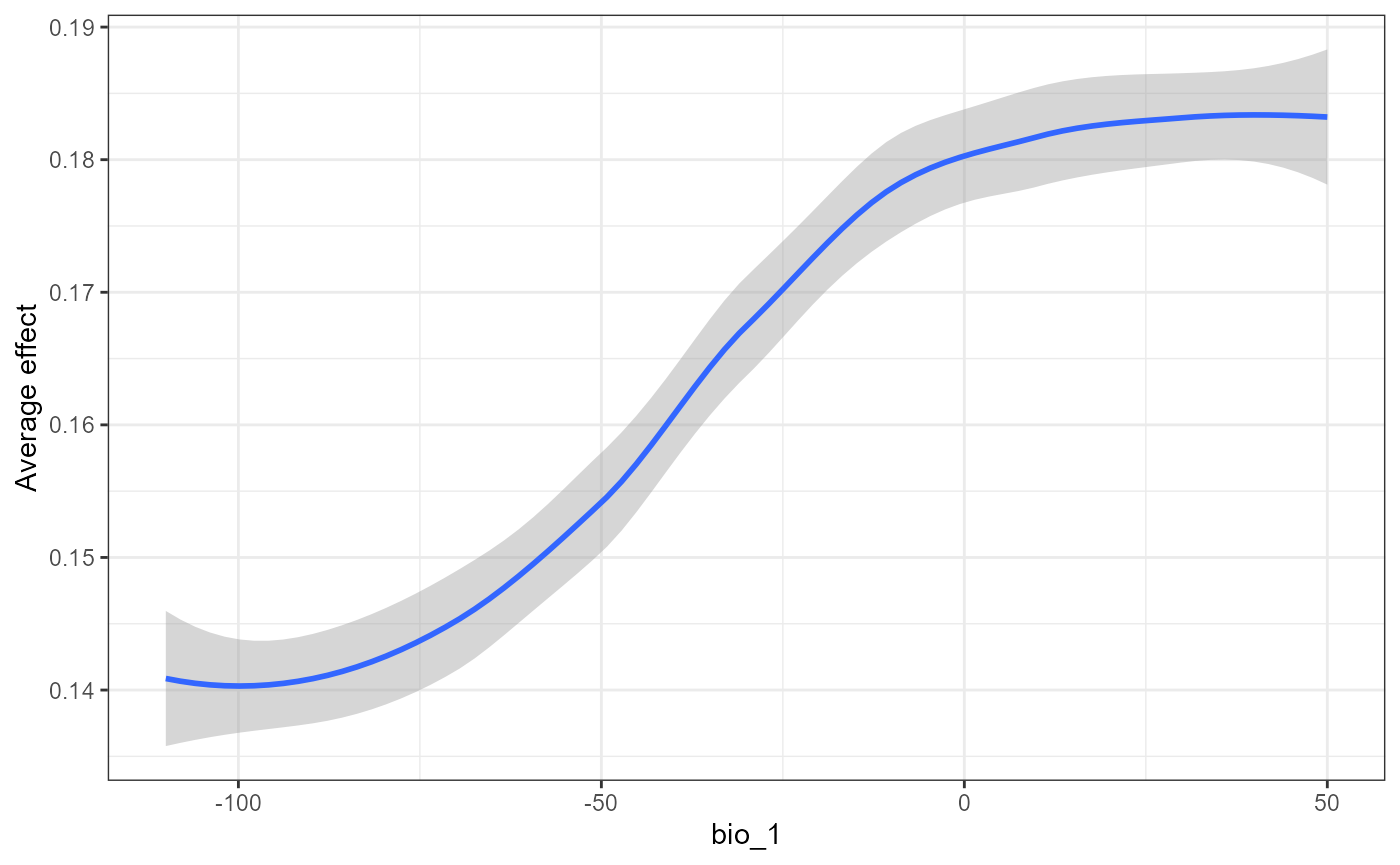

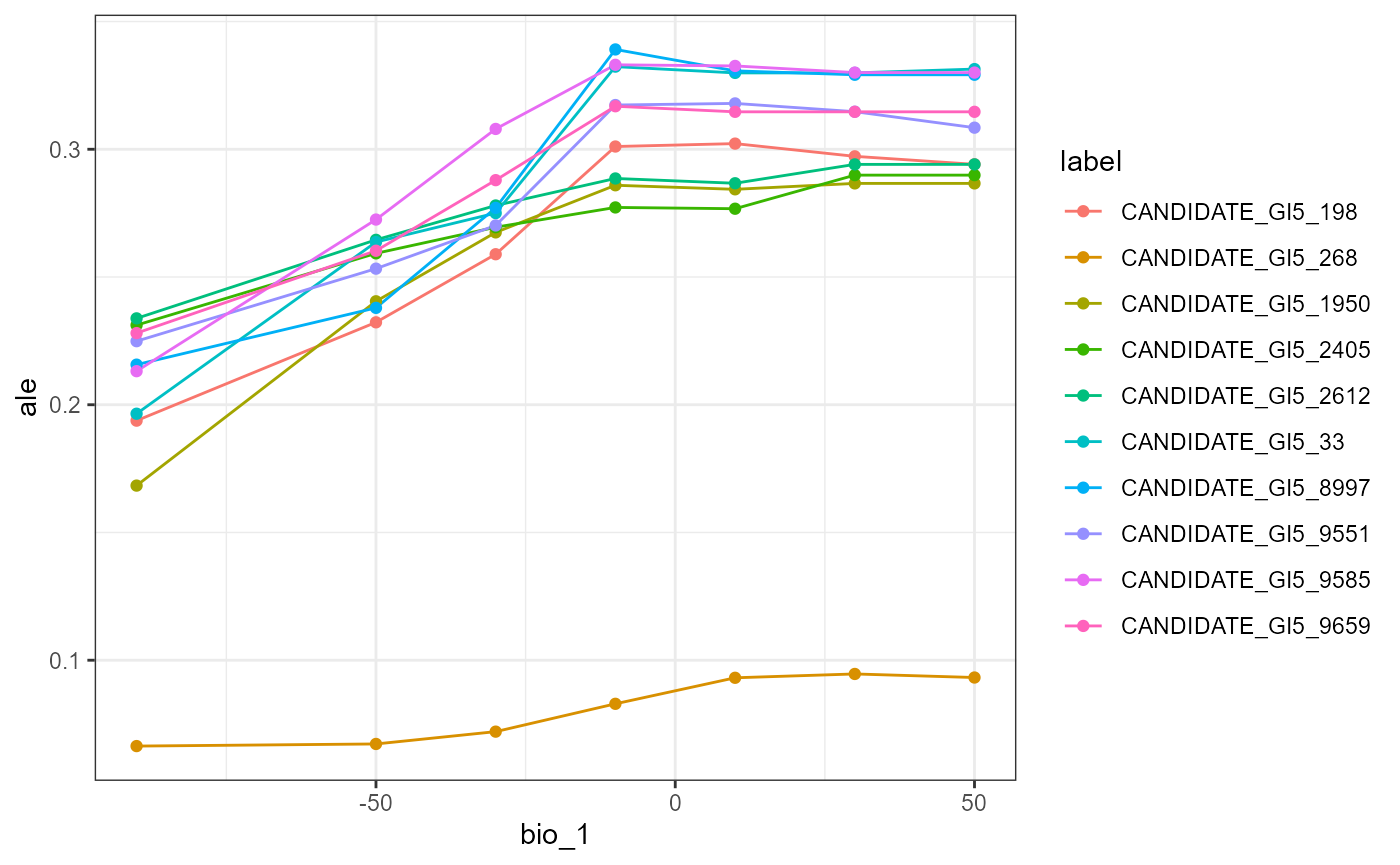

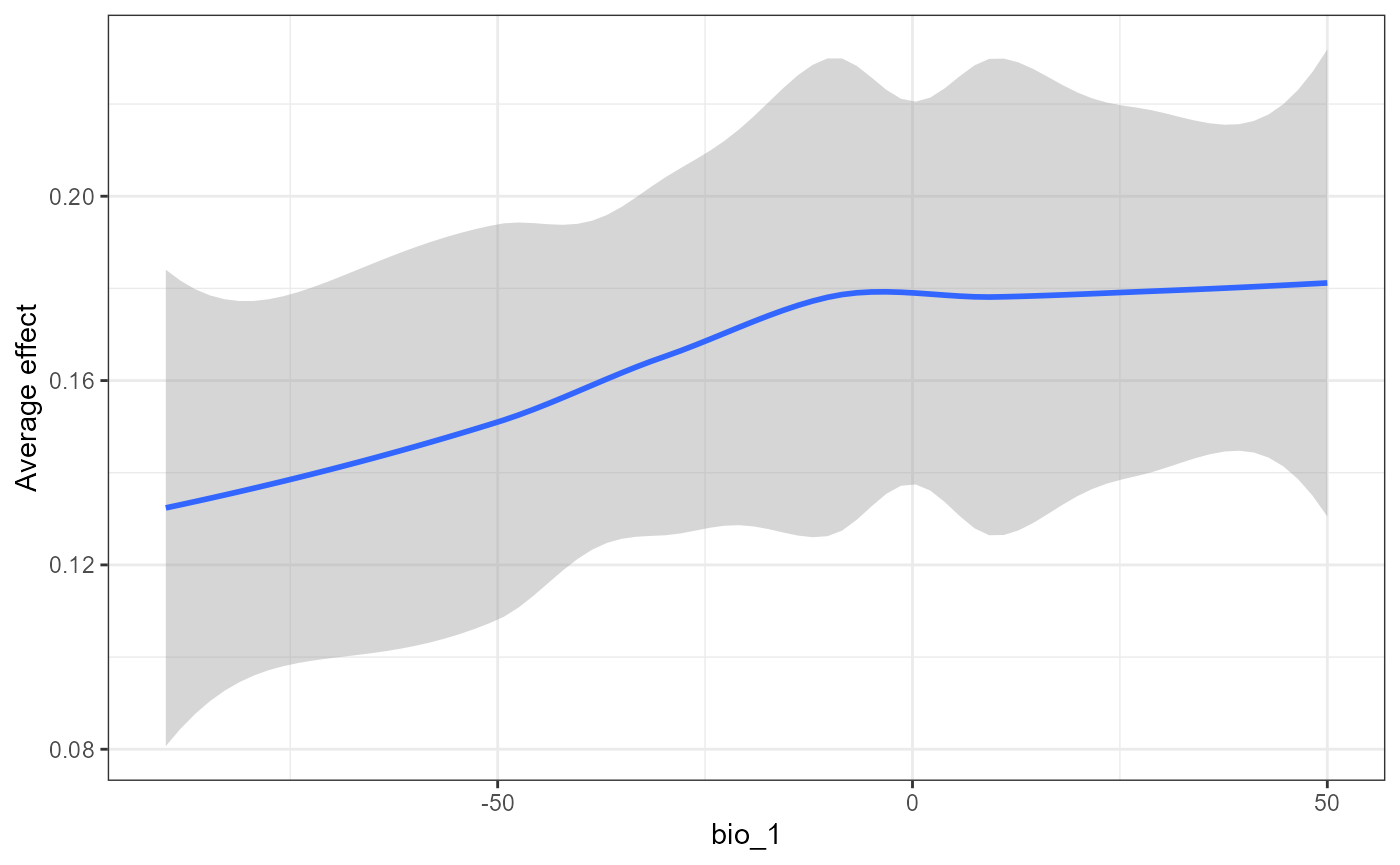

Now we can explore the model further my plotting the relationships between our SNPs and a feature in our set. Lets choose bio_1 (mean annual temperature) and plot the individual and global (average of all SNPs) partial dependency (PD) plots.

flashlightObj <- mrFlashlight(yhats_rf, X, Y, response = "multi", mode='regression')

profileData_pd <- light_profile(flashlightObj, v = "bio_1") #partial dependencies

mrProfileplot(profileData_pd , sdthresh =0.01)

#> Press [enter] to continue to the global summary plot

#> `geom_smooth()` using formula 'y ~ x'

The first plot is a partial dependency for all SNPs that respond to mean annual temperature. What we mean by respond here is that the prediction surface (the line) deviates across the Y axis of the PD plots. We measure this deviation by calculating the standard deviation and use that as a threshold (‘sd thresh=0.01’ in this case and this will differ by data set) to ease visualization of these relationships. The second plot is the smoothed average partial dependency of SNPs across a annual temperature gradient. This is very similar to the pattern observed by Fitzpatrick et al except with a slight decline in SNP turnover with mean annual temperatures > 0. Combined,you can see here only few candidate SNPs are driving this pattern and these may warrant further interrogation.

Lets compare the PDs to accumulated local effect plots that are less sensitive to correlations among features (see Molnar 2019).

profileData_ale <- light_profile(flashlightObj, v = "bio_1", type = "ale") #accumulated local effects

mrProfileplot(profileData_ale , sdthresh =0.01)

#> Press [enter] to continue to the global summary plot

#> `geom_smooth()` using formula 'y ~ x'

The effect of mean annual temperature on SNP turnover is not as distinct in the global ALE plot. This may mean that correlations between features may be important for the predictions.

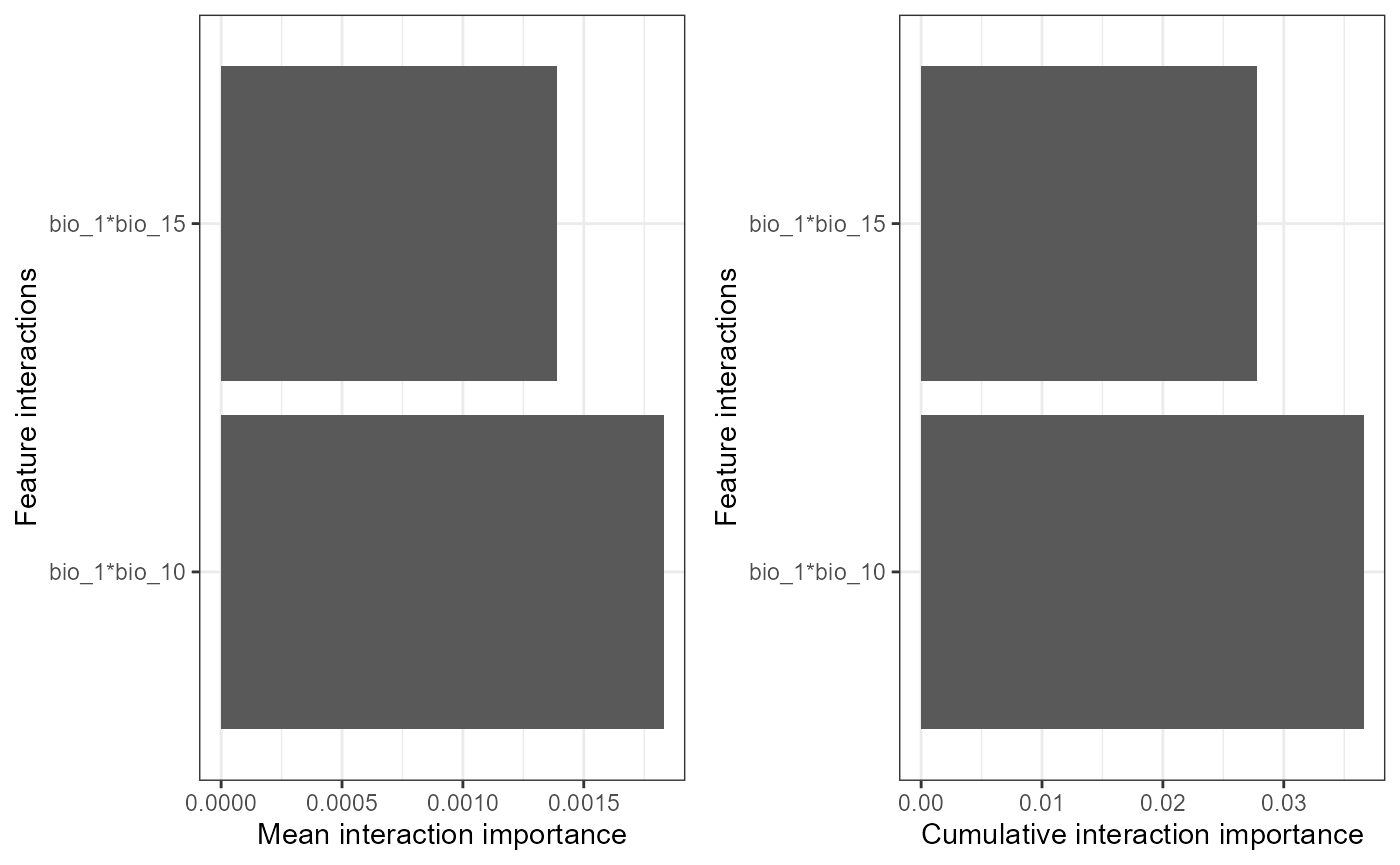

MrIML has easy to use functionality that can can quantify interactions between features. Note that this can take a while to compute.

interactions <-mrInteractions(yhats_rf, X, Y, mode='regression') #this is computationally intensive so multicores are needed.

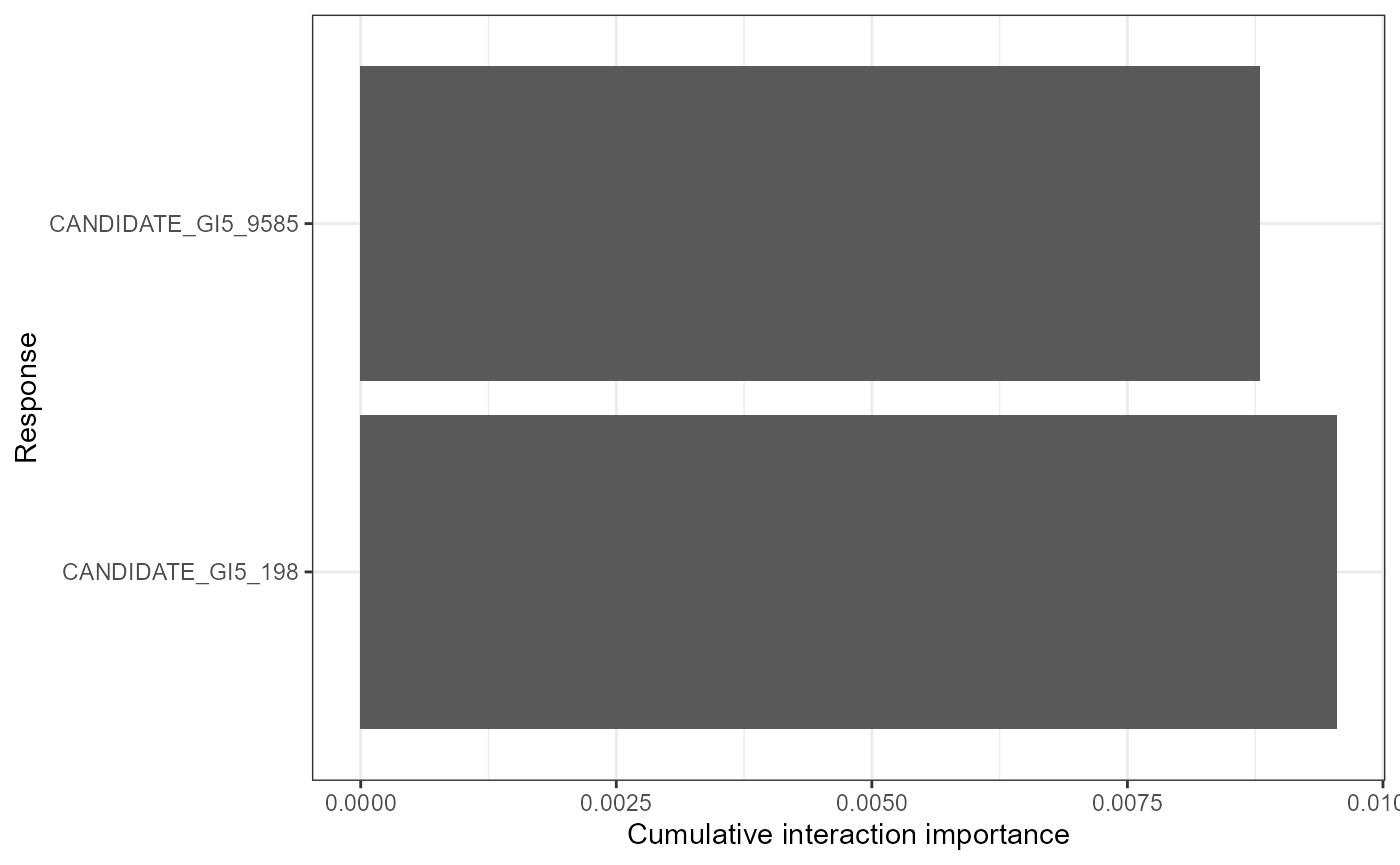

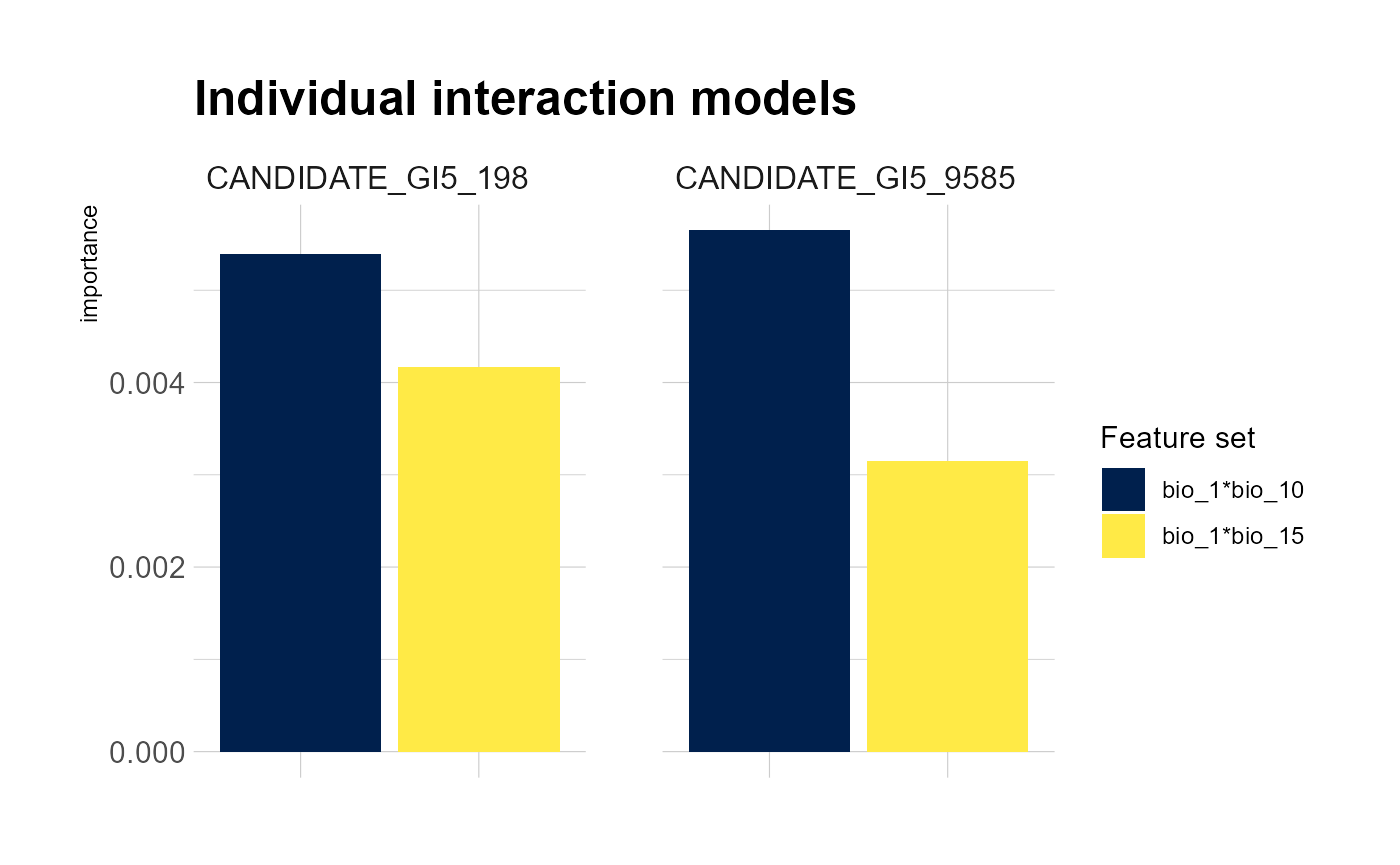

mrPlot_interactions(interactions, X,Y, top_ranking = 2, top_response=2) #can increase the number of interactions/SNPs ('responses') shown

#> Press [enter] to continue for response with strongest interactions

#> Press [enter] to continue for individual response results

#Make sure you save your results

#save(interactions, 'Fitzpatrick2016interactions')

#load('Fitzpatrick2016interactions')The first plot reveals that the strongest global interaction is between mean annual (bio_1) temperature and summer precipitation (bio_1/bio_15) but the interactions between other bioclim features are also relatively strong. Mean temperature interacts to some degree with spatial patterns as well (MEMs) to shape SNP turnover. Note that importance is all relative. the second plot shows that the predictions of candidate 33 are most effected by interacting features and the third plot shows that the interaction is between mean annual temperature and altitude.

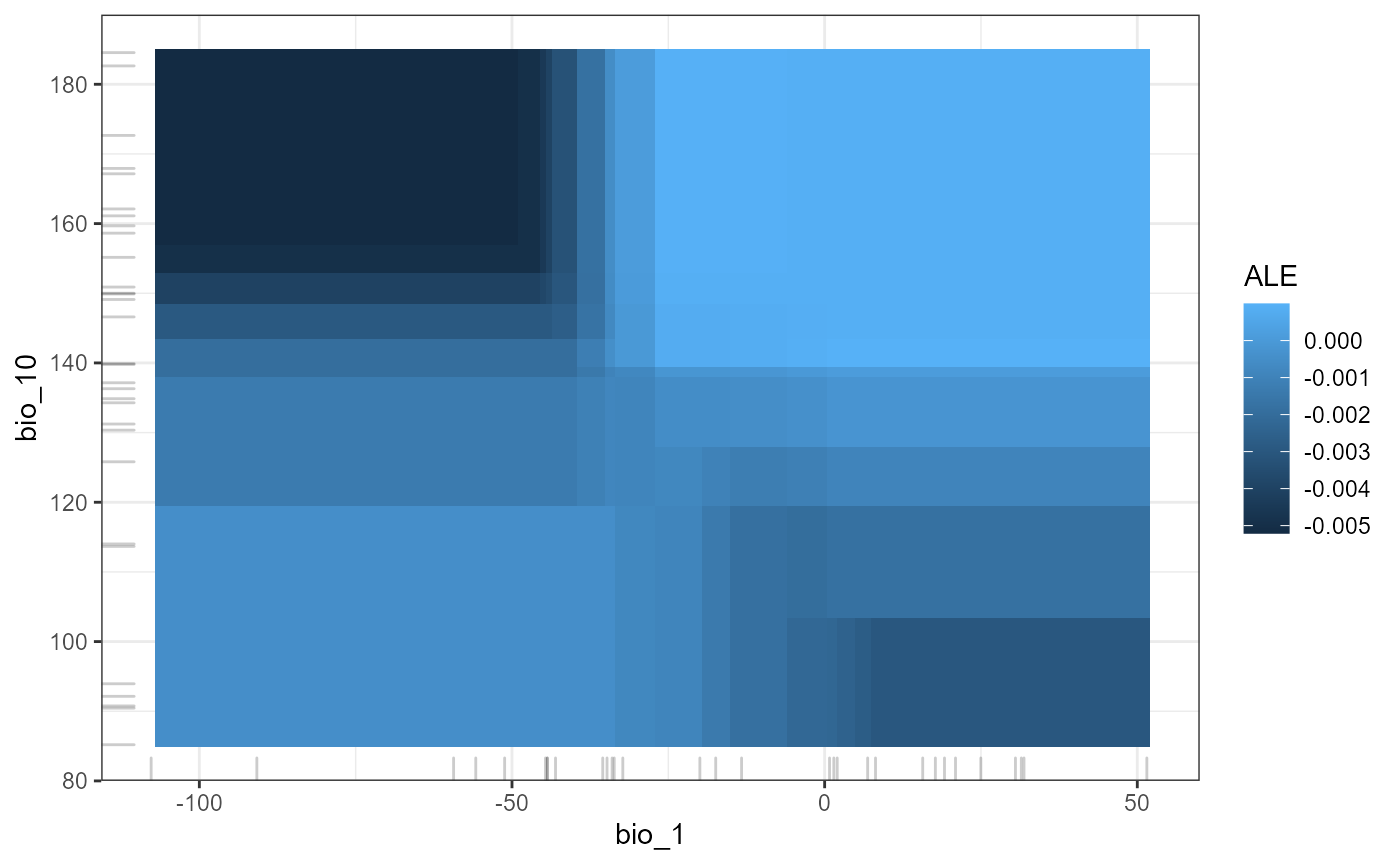

We have also provided functionality to visualize the impact of interacting feature pairs using ALEs or PDs. First we need to convert the mrIML predictor object into a form suitable for the IML package and then 2D plots can be constructed. We strongly recommend using ALEs as they a more reliable method for quantifying interactions (see Molnar (2018) for more details.

mrIMLconverts_list_reg <- MrIMLconverts(yhats=yhats_rf, X=X, mode='regression')

featureA = 'bio_1'

featureB = 'bio_10'

p2D <- mrProfile2D (mrIMLconverts_list_reg, featureA,featureB, mode='regression',grid.size=30, method = "ale")

#> Loading required namespace: yaImpute

plot(p2D) +theme_bw()

This is touching only the surface of what is possible in terms of interrogating this model. Both Flashlight and IML packages have a wide variety of tools that can offer novel insights into how these models perform. See https://cran.r-project.org/web/packages/flashlight/vignettes/flashlight.html and https://cran.r-project.org/web/packages/iml/vignettes/intro.html for other options.